DeepDetect v0.14 was released last week with inference for object detectors with torch, a novel Transformer architecture for time-series, and the new SAM optimizer. https://t.co/ISIrVaWR0w

— jolibrain (@jolibrain) March 10, 2021

Docker images at https://t.co/xNYgOQnG9h for CPU, CUDA & RT. #deeplearning #MLOps

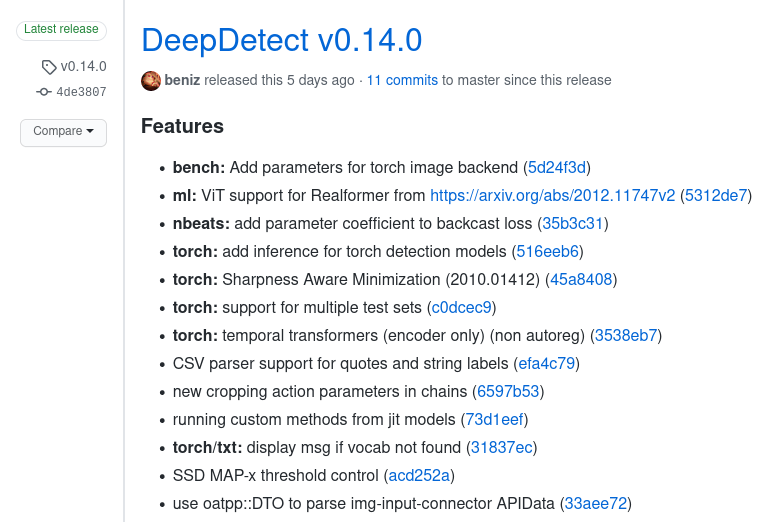

DeepDetect release v0.14.0

DeepDetect v0.14.0 was released a couple of weeks ago. Below we review the main features, fixes and additions.

In summary

- Inference for torch object detection models

- Novel Transformer architecture for time-series

- Vision Transformer with Realformer support (https://arxiv.org/abs/2012.11747v2)

- New Sharpness-Aware Minimization (SAM) optimizer with torch

Other goodies

- Support for multiple test sets with the torch backend

- Improved CSV input parser, e.g. for handling quotes

- More configurable cropping action when chaining models

- SSD mAP-x metric control when training an object detector

Docker images

- CPU version:

docker pull jolibrain/deepdetect_cpu:v0.14.0

- GPU (CUDA only):

docker pull jolibrain/deepdetect_gpu:v0.14.0

- GPU (CUDA and TensorRT):

docker pull jolibrain/deepdetect_gpu_tensorrt:v0.14.0

- GPU with torch backend:

docker pull jolibrain/deepdetect_gpu_torch:v0.14.0

All images are available at https://hub.docker.com/u/jolibrain

Inference with torchvision object detection models

Faster R-CNN and RetinaNet object detectors from torchvision are now readily usable for inference with the DeepDetect server. Creating a service is as easy as:

curl -X PUT http://localhost:8080/services/detectserv -d '
{
  "description": "fasterrcnn",
  "mllib": "torch",
  "model": {
    "repository": "/path/to/model/"
  },
  "parameters": {
    "input": {
      "connector": "image",
      "width": 224,
      "height": 224,
      "rgb": true,
      "scale": 0.0039
    },
    "mllib": {
      "template": "fasterrcnn"
    }
  },
  "type": "supervised"
}'

Then, for inference:

curl -X POST http://localhost:8080/predict -d '
{
  "data": [
    "/path/to/cat.jpg"
  ],
  "parameters": {
    "input": {
      "height": 224,
      "width": 224
    },
    "output": {
      "bbox": true,
      "confidence_threshold": 0.8
    }
  },
  "service": "detectserv"
}'
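For readers driving the server from Python, the two curl calls above can be reproduced with the standard library alone. This is a minimal sketch: the helper names (call_dd, service_payload, predict_payload, extract_boxes) are hypothetical, the server is assumed to listen on localhost:8080, and extract_boxes assumes the detections come back under body.predictions[i].classes, each entry carrying cat, prob and a bbox with xmin, ymin, xmax and ymax.

```python
import json
import urllib.request

DD_URL = "http://localhost:8080"  # assumed address of a running DeepDetect server


def call_dd(method, path, payload, base_url=DD_URL):
    """Send a JSON payload to the DeepDetect REST API and decode the JSON reply."""
    req = urllib.request.Request(
        base_url + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def service_payload(repository):
    # Same service definition as the PUT call above.
    return {
        "description": "fasterrcnn",
        "mllib": "torch",
        "model": {"repository": repository},
        "parameters": {
            "input": {"connector": "image", "width": 224, "height": 224,
                      "rgb": True, "scale": 0.0039},
            "mllib": {"template": "fasterrcnn"},
        },
        "type": "supervised",
    }


def predict_payload(images, threshold=0.8, service="detectserv"):
    # Same prediction request as the POST call above.
    return {
        "service": service,
        "parameters": {
            "input": {"height": 224, "width": 224},
            "output": {"bbox": True, "confidence_threshold": threshold},
        },
        "data": images,
    }


def extract_boxes(response):
    """Flatten detections into (uri, label, prob, (xmin, ymin, xmax, ymax)) tuples.

    Assumes the response layout body.predictions[i].classes[j] with keys
    'cat', 'prob' and 'bbox'.
    """
    boxes = []
    for pred in response.get("body", {}).get("predictions", []):
        for c in pred.get("classes", []):
            b = c["bbox"]
            boxes.append((pred.get("uri"), c["cat"], c["prob"],
                          (b["xmin"], b["ymin"], b["xmax"], b["ymax"])))
    return boxes
```

With a server running, calling call_dd("PUT", "/services/detectserv", service_payload("/path/to/model/")) and then extract_boxes(call_dd("POST", "/predict", predict_payload(["/path/to/cat.jpg"]))) mirrors the service creation and prediction requests shown above.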

Transformer architecture for time-series

Transformers originate from NLP and can be applied to time-series forecasting via a series of small modifications and careful testing.

This is what we have done on very large datasets from our customers at Jolibrain. The carefully selected neural architectures and their proper setup are now part of DeepDetect.

Our preliminary results show that these architectures are very efficient with time-series as well.

The good thing about the DeepDetect API is that this novel architecture is readily usable with ridiculously small changes to the API calls from our previous short tutorials on time-series. Simply use ttransformer (for Temporal Transformer) as the neural template, and review the updated DeepDetect API for the ttransformer options. Note that using the default values always provides a good and easy start!
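As an illustration of how small the change is, the sketch below takes an existing time-series service definition and switches it to the ttransformer template. The example payload is hypothetical (its csvts connector and recurrent template stand in for whatever a previous tutorial used), and dropping leftover template_params so that the ttransformer defaults apply is a design choice of this sketch, not documented DeepDetect behavior.

```python
import copy
import json

# Hypothetical time-series service from an earlier tutorial; the field values
# are placeholders, not taken from this release note.
EXAMPLE_TS_SERVICE = {
    "description": "timeseries",
    "mllib": "torch",
    "model": {"repository": "/path/to/model/"},
    "parameters": {
        "input": {"connector": "csvts"},
        "mllib": {"template": "recurrent"},
    },
    "type": "supervised",
}


def use_ttransformer(service, template_params=None):
    """Return a copy of `service` that uses the ttransformer template.

    With template_params left as None, options left over from the previous
    template are dropped so that the ttransformer defaults apply.
    """
    new_service = copy.deepcopy(service)  # never mutate the caller's payload
    mllib = new_service.setdefault("parameters", {}).setdefault("mllib", {})
    mllib["template"] = "ttransformer"
    if template_params is None:
        mllib.pop("template_params", None)
    else:
        mllib["template_params"] = dict(template_params)
    return new_service


print(json.dumps(use_ttransformer(EXAMPLE_TS_SERVICE), indent=2))
```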

Realformer support with Vision Transformer (ViT)

Realformer is a simple change to the Transformer architecture that yields a slight improvement in accuracy on various tasks for a relatively small additional computational cost.

Bringing the Realformer to the Vision Transformer architecture is reminiscent of residual (aka ResNet) architectures. The difference here is that the residual connections link the raw (pre-softmax) attention scores of the attention heads in successive Transformer blocks, rather than the block outputs.
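To make the residual idea concrete, here is a toy, single-head version of RealFormer's residual attention in plain Python. Following the RealFormer paper, each layer adds the previous layer's raw attention scores to its own QK^T / sqrt(d) before the softmax and passes the summed scores on. It is a sketch, not DeepDetect's implementation: the learned Q/K/V projections, multiple heads and the rest of the Transformer block are left out.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m):
    """Numerically stable softmax applied to each row."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def residual_attention(q, k, v, prev_scores=None):
    """One RealFormer-style attention head.

    Raw scores QK^T / sqrt(d) are computed as usual; if the previous layer's
    raw scores are given, they are added before the softmax. Returns the
    attention output and the summed raw scores for the next layer.
    """
    d = len(q[0])
    kt = [list(col) for col in zip(*k)]  # K transposed
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q, kt)]
    if prev_scores is not None:
        # The residual edge: carry the previous layer's scores forward.
        scores = [[s + p for s, p in zip(r, pr)] for r, pr in zip(scores, prev_scores)]
    weights = softmax_rows(scores)
    return matmul(weights, v), scores
```

Stacking two layers would look like out1, s1 = residual_attention(x, x, x) followed by out2, s2 = residual_attention(out1, out1, out1, prev_scores=s1), where reusing the same matrix as queries, keys and values stands in for the omitted projections.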

This is easily tested by passing the "realformer": true parameter in the mllib.template_params object.
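A service definition with the option enabled might look as follows, built in Python for readability. Only "template_params": {"realformer": true} comes from this release note; the "vit" template name, the "nclasses" key, the image size and the scale value are assumptions to check against the DeepDetect API documentation.

```python
import json


def vit_service_payload(repository, nclasses, realformer=True):
    """Build a hypothetical ViT image classification service definition.

    Assumed, not confirmed by the release note: the "vit" template name,
    the "nclasses" key and the input settings.
    """
    return {
        "description": "vit-realformer",
        "mllib": "torch",
        "model": {"repository": repository},
        "parameters": {
            "input": {"connector": "image", "width": 224, "height": 224,
                      "rgb": True, "scale": 0.0039},
            "mllib": {
                "template": "vit",
                "nclasses": nclasses,
                # The option described in this release note.
                "template_params": {"realformer": realformer},
            },
        },
        "type": "supervised",
    }


print(json.dumps(vit_service_payload("/path/to/model/", 2), indent=2))
```

The printed JSON can then be sent with the same curl -X PUT call used for the detection service above.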