Blog

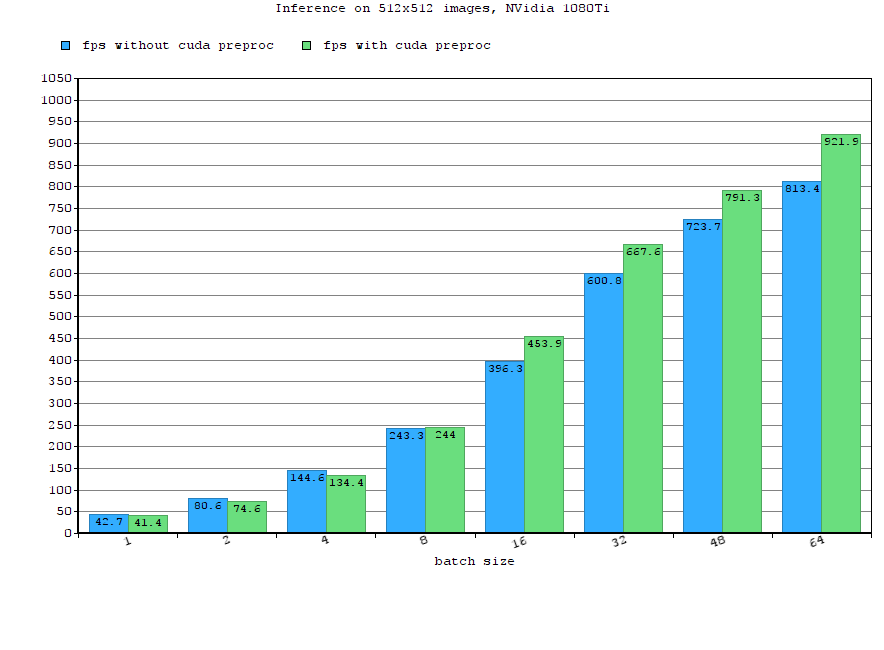

Real-time multiple model inference: DeepDetect full CUDA pipeline

10 January 2022

This blog post describes improvements to the TensorRT pipeline for desktop and embedded GPUs with DeepDetect.

Full CUDA pipeline for real time inference

DeepDetect helps with creating production-grade AI / Deep Learning applications with ease, at every stage of development. For this reason, there’s no gap between development and production phases: we do it all with DeepDetect.

This covers everything from training, to prototyping an application, up to final production models that can run at optimal performance and perform inference in real time.

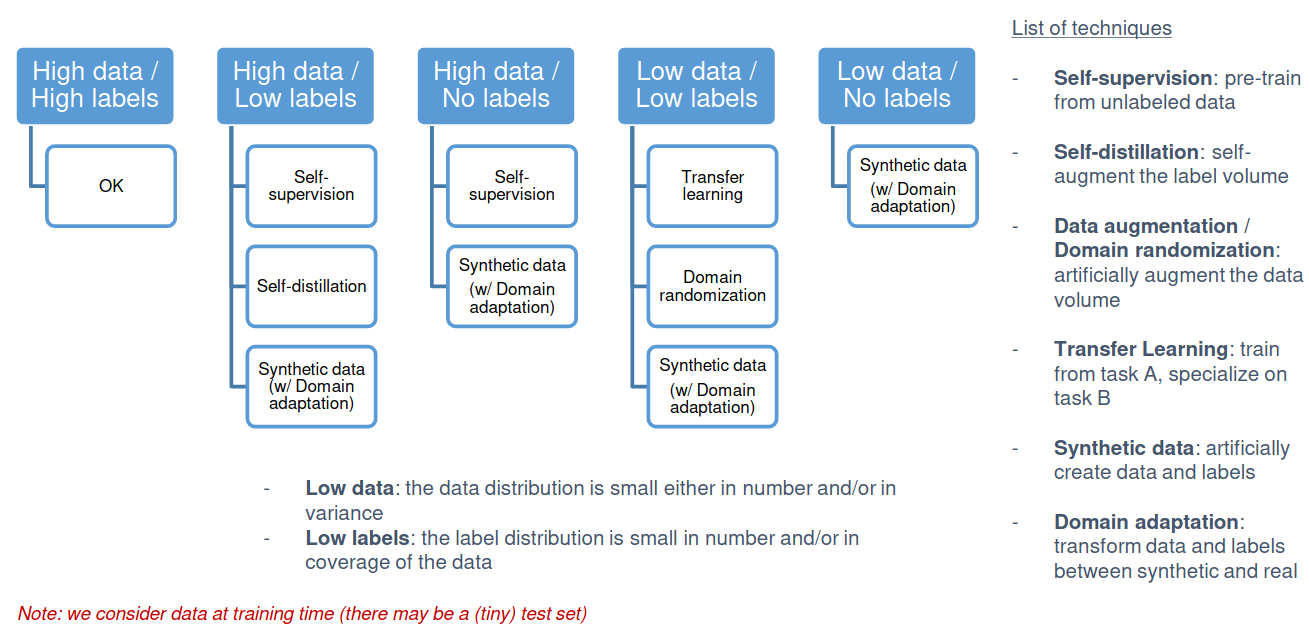

Frugal models: strategies for deep models with small data

19 August 2021

Deep Learning models can be understood as super-human replacements for hand-coded applications.

However, two very common bottlenecks, among others, arise when building deep neural networks in practice for industrial applications: heavy compute and data volume.

Jolibrain was recently invited to share its results on building Deep Learning applications in industry, especially with little data and sometimes no labels. This post summarizes some of the elements presented.

Heavy compute

Compute requirements, especially when training large neural networks, have kept growing over the past decade.

Building high performance C++ Deep Learning applications with DeepDetect

11 June 2021

DeepDetect Server comes with a REST API that makes it easy to build Deep Learning applications, Web-based or otherwise.

By Deep Learning application, we refer here to either or both of:

Training: training deep neural networks from images, videos, text, CSV or other data, including training from scratch, re-training and finetuning (a.k.a. transfer learning)

Inference: Running one or more deep neural networks, collecting and using results. This includes running the so-called DeepDetect chains of models, i.
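As an illustration of the inference side of the REST API, here is a minimal sketch that builds and sends a `/predict` request from Python using only the standard library. It assumes a DeepDetect server listening on `localhost:8080` and an already-created image classification service; the service name `imageserv` and the request fields follow our reading of the DeepDetect API documentation and should be checked against your server version.

```python
import json
import urllib.request

# Assumption: a DeepDetect server on its usual default port; adjust to your deployment.
DD_URL = "http://localhost:8080"


def build_predict_payload(service, image_urls, best=3, width=224, height=224):
    """Build the JSON body of a /predict call (field names assumed from the DD API docs)."""
    return {
        "service": service,
        "parameters": {
            "input": {"width": width, "height": height},  # resize applied server-side
            "output": {"best": best},                     # number of top classes returned
        },
        "data": list(image_urls),
    }


def predict(service, image_urls, best=3):
    """POST the payload to /predict and return the decoded JSON response."""
    body = json.dumps(build_predict_payload(service, image_urls, best)).encode("utf-8")
    req = urllib.request.Request(
        DD_URL + "/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a running server, `predict("imageserv", ["https://example.com/cat.jpg"])` would return the server's JSON answer; the URL and service name here are hypothetical. Keeping the payload builder separate from the HTTP call makes the request shape easy to inspect and test offline.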

DeepDetect v0.17.0

31 May 2021

DeepDetect release v0.17.0

DeepDetect v0.17.0 was released a few weeks ago. Below we review the main features, fixes and additions.

DeepDetect v0.17 was released few weeks ago. It has the novel Visformer architecture, and new image data augmentation with Torch in C++, among other things. https://t.co/jy42d1zXMf

The DeepDetect is fully Open Source and continues to bring our real-world DL advances to production

— jolibrain (@jolibrain) June 1, 2021

In summary

- Improved image data augmentation for image classification and object detection models with the Torch backend
- Improved inference batching for object detectors with torch C++
- Visformer architecture for large-scale image classification

Fixes & Improvements

- fix to RetinaNet training
- torch graph fix when loading weights

Docker images

CPU version: docker pull jolibrain/deepdetect_cpu:v0.

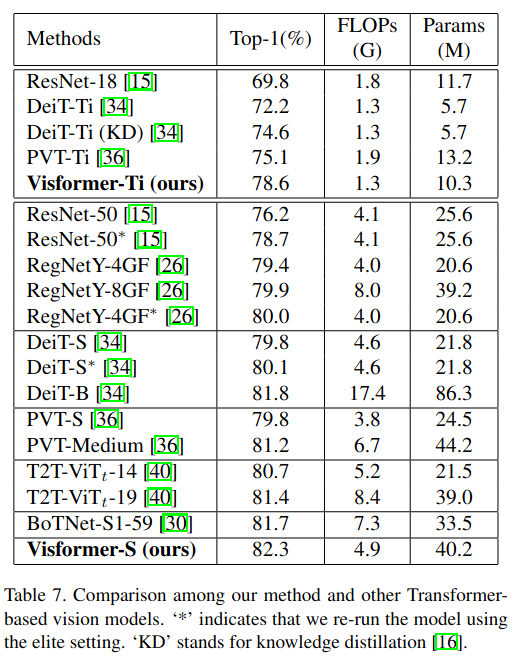

Experimenting with Visformer for computer vision

19 May 2021

DeepDetect now supports one of the promising new architectures for computer vision, the Visformer. This short post introduces current trends in novel neural architectures for computer vision, and shows how to experiment with the Visformer in DeepDetect in just a few minutes.

The Visformer mixes the convolutional approach with full-attention transformer layers in a hybrid design with good properties: its FLOPs/accuracy ratio is 3x better than that of a ResNet-50, with a 2% increase in accuracy.

Training high accuracy Object Detectors

7 May 2021

Training an object detector using PyTorch and DeepDetect

PyTorch is the most widely used framework in ML research and industry today. PyTorch also offers one of the most versatile and useful pure C++ APIs among Deep Learning frameworks.

For these reasons, our focus has been to develop the DeepDetect C++ torch backend so that it is as complete as possible with respect to most of the automation tasks DeepDetect supports: mostly computer vision, NLP, time-series and CSV data.

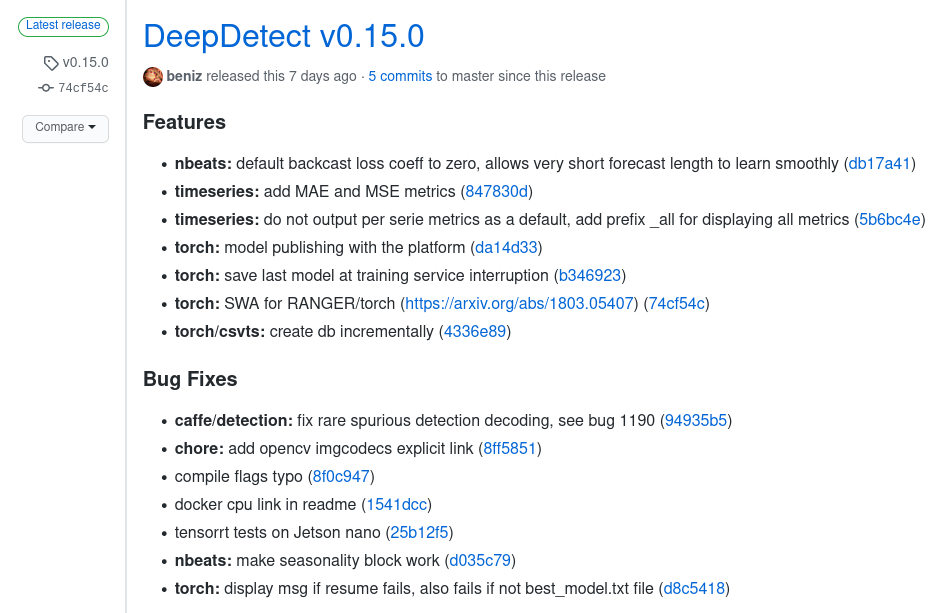

DeepDetect v0.15.0

2 April 2021

DeepDetect release v0.15.0

DeepDetect v0.15.0 was released last week. Below we review the main features, fixes and additions.

DeepDetect v0.15 was released last week with new SWA optimizer for deep nets, fixes and improvements from time-series forecasting with seasonality to object detectors. https://t.co/00WZppZ2xh

DD is our swiss army knife for applied deep learning!

All docker https://t.co/ISsZ3ljAM4

— jolibrain (@jolibrain) April 2, 2021

In summary

- Stochastic Weight Averaging (SWA) for training with the torch backend
- All our builds are now using Ubuntu 20.
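Stochastic Weight Averaging keeps a running average of the weights visited late in training, sampled at regular snapshots (for instance at the end of each epoch). The sketch below shows only that averaging step, on plain Python lists; it is not DeepDetect's C++ implementation, and it omits the learning-rate schedule and the BatchNorm statistics recomputation that practical SWA setups usually add (PyTorch exposes these pieces as `torch.optim.swa_utils.AveragedModel`, `SWALR` and `update_bn`).

```python
def swa_update(swa_weights, new_weights, n_averaged):
    """Fold one weight snapshot into the running SWA average.

    swa_weights: current averaged weights (list of floats), or None before the first snapshot.
    new_weights: model weights at the current snapshot.
    n_averaged: number of snapshots already folded into swa_weights.
    Returns (updated average, updated snapshot count).
    """
    if swa_weights is None:
        # The first snapshot simply initializes the average.
        return list(new_weights), 1
    # Incremental mean: avg_{n+1} = (avg_n * n + w) / (n + 1)
    updated = [
        (w_avg * n_averaged + w) / (n_averaged + 1)
        for w_avg, w in zip(swa_weights, new_weights)
    ]
    return updated, n_averaged + 1


# Hypothetical weight snapshots, e.g. taken at the end of three late epochs.
snapshots = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
avg, n = None, 0
for snapshot in snapshots:
    avg, n = swa_update(avg, snapshot, n)
print(avg, n)  # [3.0, 4.0] 3
```

The incremental form avoids storing every snapshot: only the current average and a counter are kept, which matters when the weights are those of a large network.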

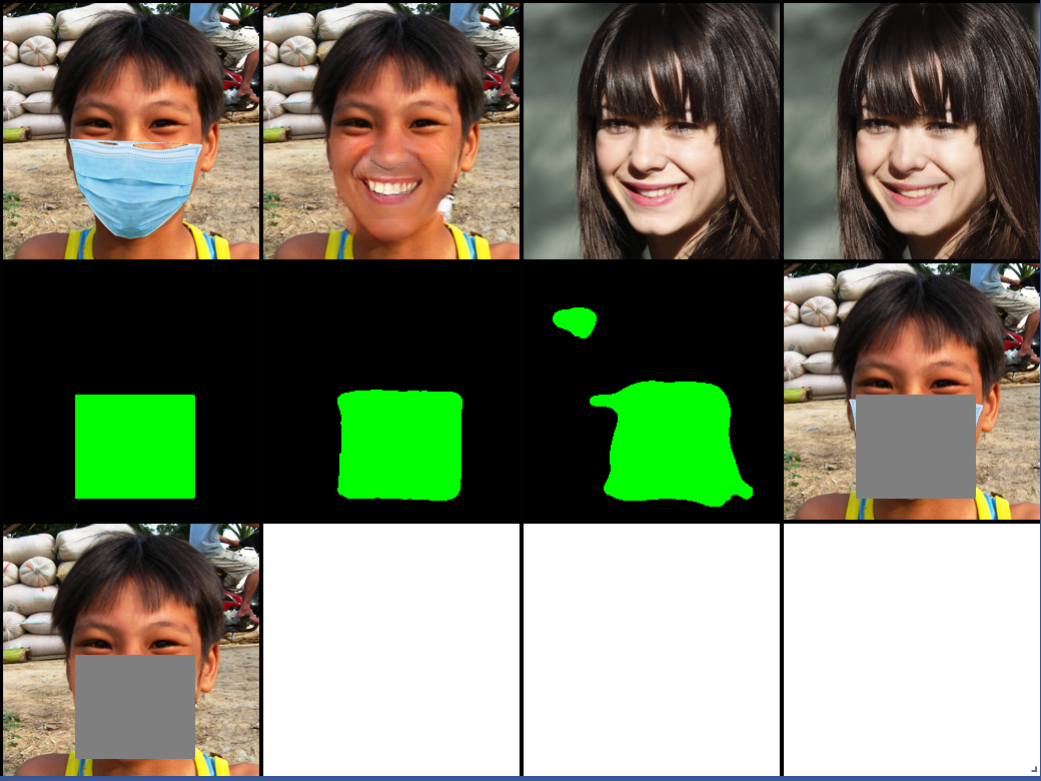

Removing face masks with JoliGAN

26 March 2021

Having fun with https://t.co/Y7NXTdxTlV removing masks… #GAN #DeepLearning pic.twitter.com/VTUMO9j4Lh

— jolibrain (@jolibrain) March 20, 2021

This post shows a somewhat frivolous application of our JoliGAN software that removes masks from faces. We use this example as a proxy for much more useful industrial and augmented reality applications (actually completely unrelated to faces, etc…).

Some result samples are below.

At Jolibrain we solve industrial problems with advanced Machine Learning, and we build the tools to help us fulfill this endeavour.

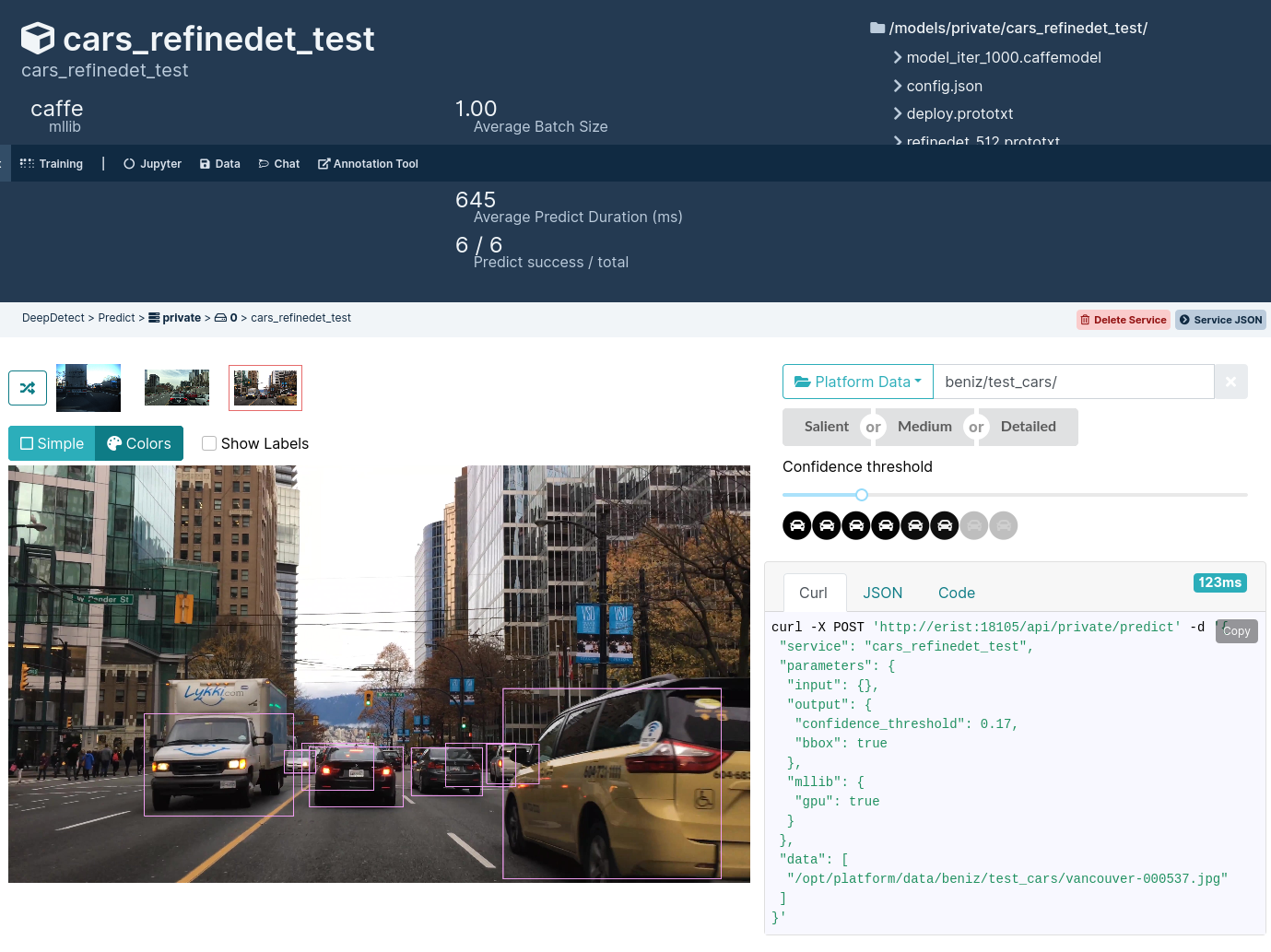

Quickly build an object detector with the DeepDetect Platform

19 March 2021

This post describes how we quickly train object detectors at Jolibrain. As a tutorial, we train a car detector from road video frames using the DeepDetect Platform, a Web UI on top of the DeepDetect Server. All our products are Open Source.

At Jolibrain we specialize in hard deep learning topics, i.e. those for which no off-the-shelf product exists yet. Everything else we automate and put into DeepDetect Server and Platform.

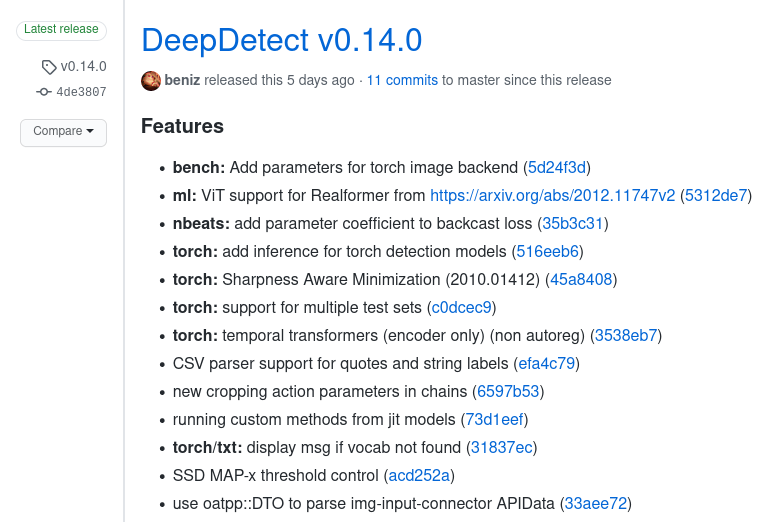

DeepDetect v0.14.0

10 March 2021

DeepDetect v0.14 was released last week with inference for object detectors with torch, a novel Transformer architecture for time-series, and the new SAM optimizer. https://t.co/ISIrVaWR0w

Docker images at https://t.co/xNYgOQnG9h for CPU, CUDA & RT. #deeplearning #MLOps

— jolibrain (@jolibrain) March 10, 2021

DeepDetect release v0.14.0

DeepDetect v0.14.0 was released a couple of weeks ago. Below we review the main features, fixes and additions.

In summary

- Inference for torch object detection models
- Novel Transformer architecture for time-series
- Vision Transformer with Realformer support (https://arxiv.
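The v0.14 release tweet also mentions the SAM optimizer, which we read as Sharpness-Aware Minimization. The sketch below illustrates its two-gradient update on a toy quadratic loss in plain Python: first step to the worst-case nearby point within radius `rho`, then apply the gradient computed there to the original weights. It illustrates the general technique only, not DeepDetect's C++ implementation, and the `lr` and `rho` values are arbitrary assumptions.

```python
import math


def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One Sharpness-Aware Minimization step on a list of parameters.

    1. compute the gradient g at w
    2. perturb towards the worst-case neighbour: eps = rho * g / ||g||
    3. compute the gradient at the perturbed point w + eps
    4. apply that gradient to the original weights w
    """
    g = grad_fn(w)
    norm = math.sqrt(sum(gi * gi for gi in g))
    if norm == 0.0:
        # Zero gradient: eps is zero, so the SAM gradient is zero too and w is unchanged.
        return list(w)
    eps = [rho * gi / norm for gi in g]
    g_sam = grad_fn([wi + ei for wi, ei in zip(w, eps)])
    return [wi - lr * gi for wi, gi in zip(w, g_sam)]


# Toy loss f(w) = sum(w_i^2), whose gradient is 2 * w.
def quad_grad(w):
    return [2.0 * wi for wi in w]


w = [1.0, -2.0]
for _ in range(100):
    w = sam_step(w, quad_grad)
print(w)  # close to [0, 0], within roughly rho of the minimum
```

Because the perturbation always has length `rho`, the iterates settle in a small neighbourhood of the minimum rather than exactly on it; SAM trades two gradient evaluations per step for a bias towards flatter minima.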